"The truly scary part of AI... is not the thought that we can be replaced by unfree rule-following machines. It’s the intimation AI provides that we ourselves might be unfree as well."

In February 2023, Australia’s corporate regulator came under fire for hiring a bunch of robots. You might be envisioning a Robocop-type scenario or an army of droid soldiers right now, and I can guarantee you’re about to be disappointed. The robots in question were just artificial intelligence (AI) software programs. Yet they still managed to cause considerable unease.

This particular department receives a huge volume of written complaints about company directors. Determining which of these complaints warrant using time and resources to investigate is itself a time-consuming process. So, to speed things up and to free up staff, the department started using an AI system to decide which complaints to take further, and which to politely decline. Other organisations use AI to do similar sorting tasks, such as pre-vetting job applications.

The problem in this case was that the AI was too efficient. People submitting complaints noticed they would sometimes get a decision not to investigate in less than a minute – much too fast for any human to have read, considered, and carefully decided how to respond to the complaint. Whether the computer got it right or wrong was beside the point; people were offended that AI was making the decisions, not that the decisions were bad ones.

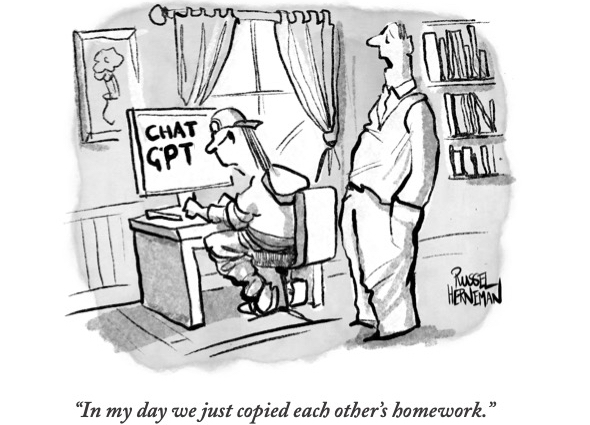

The story emerged at a moment when anxieties about artificial intelligence were spreading almost as rapidly as the technology itself. Suddenly, AI programs are available to regular folks, at least as toys. Asking a computer to draw you a panda sword-fighting a unicorn on the moon, or to write anything you want from a simple prompt, is irresistibly good fun – even if it comes with the faint sense that you’re auditioning and training up your own replacement. Yet when it comes time to lodge a formal complaint, it seems people still want to know that a flesh-and-blood person is considering the case.

But why should we prefer a human to a robot when it comes to making bureaucratic decisions – or, for that matter, creating artwork? Why should I commission an artist to produce an image for me if artificial intelligence can do the job acceptably well at a fraction of the cost? If a computer can say ‘no’ just as effectively as a bureaucrat, why insist on the human touch?

There are likely several reasons why we’re troubled by handing such tasks over to machines. Some are practical, such as worries about employment, though AI is hardly the first technology to threaten people’s jobs. Others have to do with the essential human experience that Martin Buber beautifully called “the lightning and counter-lightning of encounter”. Something profound happens when we come face-to-face with a fellow conscious being. There is, Buber wrote, a radical difference between seeing something as an ‘it’ and as a ‘you’. As Jean-Paul Sartre put it, the presence of another consciousness quite literally changes the world. Alone in a park, I see a world before me made up of objects whose positions I can describe in relation to me. But as soon as I notice someone sitting over on a park bench, a whole new perspective on the world appears, oriented around them instead of me.

Sartre spoke of ‘the look’, the experience of being seen, that discloses to us both the existence of other minds and our own object-hood. Experiences like shame, pride, or embarrassment are only intelligible if there are other conscious beings in the world to turn us into objects of scorn, envy, or pity. We cannot be embarrassed in front of mindless objects. Yet we cannot feel seen, validated, or vindicated by such objects either – including digital objects. These experiences depend upon the other we encounter having a first-person perspective, having sentience. Artificial intelligence does not. It has no experience or inner life of its own. Wittgenstein once called consciousness “the light in the face of others”. When it comes to AI, the lights are on, but nobody’s home.

But there might also be another, darker reason why we’re offended by AI. Perhaps it’s not about what AI lacks, but about what AI might show that we lack too. For all the dystopian talk of AI replacing humans, much of the discourse is on some level surprisingly optimistic. For every voice worrying that the coming of the bots will render humans obsolete, there are others reminding us that ‘artificial intelligence’ is not really intelligent at all. Peek under the hood and AI is just a series of algorithms – clever, impressive algorithms indeed, but nothing more. You cannot get more out of AI, we’re told, than what you’ve put in. That in turn means that everything an AI does is ultimately predetermined and predictable. If we know the algorithmic rules well enough, and we know all the inputs, in theory at least we could tell exactly what the AI will do.

It’s different with us though – right? There’s some uniquely human spark that lifts us above the law-constrained realm of mere matter, something which AI does not and can never share with us. AI lacks the free will and creative spontaneity that uniquely characterises the human mind. You might be able to make an AI churn out images that look like Rembrandts, but whatever it produces can only ever be a reworking of data from the past following pre-set rules. It takes Rembrandt to make a real Rembrandt, precisely because only Rembrandt can make a new Rembrandt.

So an AI, we tell ourselves, can only ever be an imposter, no matter how superficially convincing it is. We’re affronted at the idea of mindless computers painting Van Goghs or writing essays or triaging complaints not because we think they’ll do a bad job, but because we think they’re missing the originality that only humans, with their free and creative spirits, can bring to these tasks.

But what if that all turns out to be untrue? If a computer can paint Kermit the Frog riding a jet ski in the style of Vermeer just as well as any human artist, what makes human intelligence special? After all, we devote a great deal of our time and energy to studying, predicting, and manipulating human behaviour precisely as if it were unfree. Whole fields of study and practice attempt to understand the ways in which the human mind works – whether in order to help the unwell, persuade voters, or help corporations sell more products – on the assumption that minds are at least somewhat predictable, law-governed mechanisms. We sing songs of praise to the freedom of the human spirit and its unique imaginative genius, yet perhaps those songs themselves are just as predetermined and algorithmic as the ones we ask AI bots to write for us for a laugh.

In other words, the truly scary part of AI, the thing that might underlie our disquiet, is not the thought that we can be replaced by unfree rule-following machines. It’s the intimation AI provides that we ourselves might be unfree as well. Perhaps that fear is premature. Maybe AI’s limitations will always show that there truly is something unconstrained, precious, and irreplaceable about being human. Let’s hope so. Until then, we’re stuck with the nagging worry that AI will instead look under our own hoods and expose the mere machines within.

From the Intelligence edition, which can be purchased from our online store