Zan Boag: To start with, could you explain a little bit about what deepfakes are, how the technology works, when it is used, and how advanced the technology currently is?

Regina Rini: A deepfake is a fabricated recording, audio and/or video, that uses machine learning techniques to superimpose one person’s face or voice onto that of another person, creating what appears to be a recording of someone doing or saying something they never actually did or said. And the technology is pretty advanced – the key point to keep in mind is the more powerful a computer you have, the more time you have, the more money you have, the better a deepfake you can make.

Until about five or ten years ago no one could do this other than giant Hollywood studios with enormous computer image budgets. But what’s changed in the last five years is that the technology has advanced to the point now where people can do this for free on their cell phones. It’s not going to be the absolute highest quality, it’s not going to be Hollywood special effects quality, but it’s going to be pretty good quality, relatively speaking.

And so that’s the key shift that’s happened: a technology that had been around for a couple of decades in terms of computer graphics has now become cheap enough that ordinary people can do it to ordinary targets. It’s not something that’s just for Hollywood studios or intelligence agencies; it’s something that random trolls on Reddit can do.

In ‘Deepfakes and the Epistemic Backstop’, you look at the introduction of this technology. What prompted you to begin your research into all of this? Has someone you personally know been affected by this, or is it motivated by politics? Is it something you’ve looked at in the media, and thought that this could be an issue that will arrive in the future for all of us?

I learned about this from a former graduate student, Leah Cohen, who was working with me on research into disinformation. I’d written before about fake news, about disinformation in text form, but in early 2018, before most people had heard of deepfakes, she brought to my attention that there was this emerging new phenomenon.

In numerical terms, the big concern is the use of deepfakes for pornography, for revenge porn. People on the internet will superimpose the faces of their ex-girlfriends, of someone they don’t like, et cetera, et cetera, into porn. They produce a non-consensual pornographic image, and they do it intentionally either for their own gratification, or to mortify the person they’re targeting.

And so, Leah and I started off thinking about the ethics of this, the harm that this does to the targets of deepfakes. We have a paper on these ethical concerns, ‘Deepfakes, Deep Harms’, that will be coming out soon in the Journal of Ethics and Social Philosophy. My ‘Epistemic Backstop’ paper, which was published first, is a separate paper specifically about the epistemic implications.

You said that the purpose of this paper was to raise concerns about deepfakes, and the erosion of knowledge in democratic societies, before it begins to happen. We had a US presidential election in 2020. Did deepfakes play any part in the election? That is, are we already starting to see the erosion of knowledge that you feared would come to pass?

Luckily, deepfakes don’t seem to have played much, if any, role in the 2020 US presidential election. I’m not aware of any high-profile case where a deepfake influenced anyone’s vote. However, I am starting to see some early warning signs of the thing I’m afraid of. I’ve seen a few debates on Twitter now where there is an actual, real video of someone doing or saying something stupid or offensive, and then people in the comments are saying, “Are you sure this isn’t a deepfake?” And that’s starting to happen. That’s the thing I think is ultimately the scary possibility, and I think that will be accelerated once we start seeing some real political deepfakes appearing in some election, whether it’s in the US in 2024 or in some other country before then. But we’re starting to see what sounded to me like very distant drums of this problem approaching.

I’d like to come back to that erosion of trust in video technology a little bit later. But first, I’d like to talk about elections and the creation of deepfakes with the intention to deceive the public. Now, let’s say we were to see a video of a presidential candidate colluding with another country to interfere with an upcoming election. Of course, it might be hard to believe that the video is real because, as you say, many political actors, domestic and foreign, would have strong motives to create such a tape, regardless of its underlying truth. That aside, is the technology advanced enough to deceive? And if not, how long until it will be sufficiently advanced to deceive people?

It depends on what you mean by deceive. The technology’s already sufficiently advanced to produce a video that would stand up to at least a day or two’s scrutiny. It would produce arguments. People will start arguing instantly: is this real or not? And my guess is in most cases, eventually there would be some sort of debunking. Someone will catch it, either through technical means or by spotting some logical detail that doesn’t make sense, like, “That shouldn’t be in the background in that picture. It doesn’t fit chronologically,” or something like that. People eventually start debunking these things. But the question is, how long does the debunking take?

If you think about really bad video-editing software, the debunking is instantaneous. You watch it, and you can instantly tell this is nonsense. But the problem with deepfakes is that the debunking will take longer and longer. And that process – the public fight over whether or not to trust the deepfake – is the whole problem. It’s the fact that sides will be chosen over whether or not to believe this piece of videotape.

And then, once the debunking finally emerges, there’ll be some people who are so dug into their partisan side, they will refuse to believe the debunking, but continue to believe the videotape anyway, even though there’s now clear evidence that it was faked. The problem is not so much whether these videos can stand up in the very long-term to debunking, the question is what chaos do they cause on the way to that happening?

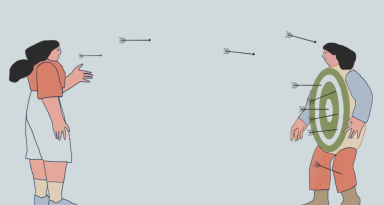

You write also about the use of deepfakes to discredit people. It doesn’t necessarily, as you say, need to be believed for it to have the desired effect. You use the example of somebody trying to discredit the governor of Chechnya. Now, the motive in that instance was not to deceive, but rather to discredit the governor. Obviously, this is going to pose some sort of risk to prominent people. But as you say, if this technology is available on people’s smartphones, it’s going to affect everyone. Anyone who may have an enemy of some sort, this deepfake technology can be used against them. When it comes to being discredited, do you see this as more of a risk for prominent people, or for the everyday person?

Oddly, I think the risk of being lastingly discredited may apply more to ordinary people in the long term, because there are fewer resources, and fewer people attending to debunking videos of ordinary people than to videos of public figures with partisans on their side.

In terms of pure social chaos, putting up a fake video of Donald Trump, or Joe Biden, or Boris Johnson, or whomever, that’s going to cause a huge amount of fighting, but that will hopefully eventually be settled in some forensic way. Whereas, putting up a fake video of you or of your cousin, there may be nobody in your social circle who has the tools to debunk that video ever, with any amount of time.

And so, I think in the long term, this has really scary consequences as well for ordinary people. Though most of us will probably see it first on some high-profile case involving a celebrity or a politician.

Now, with celebrities and politicians, you said that they have the resources, or at least there’ll be a discussion on this, and the general public will try to debunk the deepfake. But you made a very good point in that the debunking never travels as far as the original. What does the use of deepfakes do for our trust in video recordings?

My big worry, the key point in the paper, is that I think recordings have played an unnoticed role in our public epistemic practice. Their function is what I call an ‘epistemic backstop’. A lot of what we learn about the world is from people telling each other things, what philosophers call ‘testimony’. I tell you such and such happened, you tell me such and such happened, and our trust in that kind of evidence comes from whether we trust the person saying the thing.

And I think recordings have functioned in the background to make us all a little bit more careful about the kind of testimony we give. Because we know that for public events, there’s the possibility that some recording will emerge to show that we’ve exaggerated, or lied, or misremembered. And it’s given us all a background reason to just be a little bit more careful, and not exaggerate, and to be honest when we’re unsure about what happened. That sort of thing.

And so, the worry I have is that if the reliability of recordings themselves becomes highly controversial, if it becomes doubted widely, because we’ve seen a bunch of deepfakes being exposed and debunked, then over time, that background function of recordings to keep us all honest will start to fade. And so, our incentives to trust each other to tell the truth, more or less, most of the time, will similarly fade over time.

And this you refer to as “the passive regulatory role of recordings”, which is more important than the “acute corrective” role. As you would be aware, Jeremy Bentham invented the Panopticon – a circular prison with a tower in the middle. The prisoners could never tell whether they were being watched, so their behaviour was modified accordingly. Do video recordings operate in a similar sort of way – or, at least, did they up until the advent of deepfakes – in that we feel like we’re being watched, so we modify our behaviour?

I think that might be true about public spaces, especially in countries like the UK or China, where there are CCTV cameras everywhere. And increasingly, I think it’s true even in relatively private spaces, because people carry their cell phones everywhere, and you never know when somebody standing right next to you just happens to have their phone on, just happens to be streaming themselves on some platform.

In any case, I think we’re increasingly getting used to the idea that the possibility of being recorded is always there. I don’t know if it’s sad, or if it’s just strange that we’re at this historical moment where the pervasiveness of recording technology is reaching a point like Bentham’s Panopticon idea, and at the same time, the threat of deepfakes will erode the power that the possibility of being recorded has to regulate our choices. It’s these two historical moments crashing into each other, and we’re probably going to live through that in the next decade or two.

You say that the very fact that we could be recorded provides a strong reason for people to tell the truth. What happens, as is the case with the existence of deepfakes, when the veracity of such recordings can be thrown into doubt?

We can think about this in terms of obvious implications and less obvious ones. And an obvious one is something that the legal theorists Danielle Citron and Bobby Chesney have called “the liar’s dividend”. It’s this idea that political figures could, from this point forward, not care so much about what they say in public, because they can always claim later that it was just a deepfake. If they get caught, if they mess up and admit something they didn’t mean to admit, or if they say something incredibly offensive in front of a camera, et cetera. If they can credibly claim, “It was just a deepfake. My political opponents manufactured that,” they then have the ability to be much less careful. And I can imagine this getting much worse. Imagine, say, a politician engaging in overt race baiting in front of a nationalist crowd, intending to rally that nationalist crowd, and then later on telling the general audience, international audience, “I never did that. The recording of me doing that in front of the rally was deepfaked.” It provides an ability for public figures to manipulate audiences at two levels at once. There’s no longer the check of the external audience looking in through your recording on what’s going on in this scenario, because the external evidence can be discounted.

I think those are the obvious implications for truth-telling. The less obvious ones are for the rest of us, who don’t have the same sneaky motives as politicians, but who nevertheless, I think, do find our choices being increasingly regulated by the background possibility that there is a casual recording of us going on somewhere. Or if not a casual recording of us, a casual recording of the event that we are testifying to. Even if I wasn’t being filmed when I told you what happened last Thursday on the street corner, maybe somebody was filming what happened last Thursday on the street corner, and that video might emerge later.

As we become aware that recordings of what happened last Thursday on the street corner can be doubted, then our need to be very, very careful starts to fade. And my thought is not that we’re all going to turn into these Machiavellian politicians, constantly lying, it’s just that we’re going to indulge natural human tendencies to exaggerate, and to slide the truth a little bit in our own favour. The same sort of stuff people already do, it’s just that one of the safeguards against us doing that will start to fade away.

This is quite a revolutionary moment when it comes to truth. Because as you note in your paper, during the lifetime of everyone currently alive, recordings have been there. And it’s ramped up over the course of the last few decades in that there’s more CCTV, and people do have smartphones. There’s much more perceptual evidence there, which will back up or debunk whatever it is that we may claim to have happened. With court proceedings, legal matters, and criminal activities, video evidence has often been used against people in a court of law. What’s going to happen there? Will video evidence no longer carry the same weight?

That’s going to be really hard, and forensic computer scientists and legal theorists are going to have to figure out new standards of evidence. One thing to think about, and I know folks are already working on this, has to do with the idea of a digital chain of custody, where for a videotape to be strong evidence of facts in a court of law, there needs to be meta documentation of that digital file: how it was recorded, when it was recorded, who has had access to the file over time. Basically, much more care is going to have to be taken with the source of recordings.

What this means is a lot of the kind of recordings that we might think about as a casual or even democratised recording won’t have that same chain of custody. They won’t have that same reason to trust. And this is sad because some of the best examples we have of the use of democratised recording have to do with police brutality. You think about so many recordings of police mistreating people of colour. Especially in the United States, those recordings have played a huge role in making the public believe what it didn’t want to believe before, that this was happening.

And the worry is that in the future, when ordinary citizens record these things, they’re not going to be able to keep track of that same high level of proof, that digital chain of custody on their cell phone. And then, they won’t qualify for the future reasonable standards of evidence that will be needed once we’re afraid of deepfakes.

Yes, in the past recordings supported or corrected testimonial evidence. Does this mean that recordings, or a large proportion of recordings, might be demoted to mere testimonial evidence?

I think the likely possibility here is that recordings will come to occupy a similar sort of social role. You can trust what the recording shows you if you trust the person who made the recording, but not if you don’t. If you don’t know who made the recording, or if you don’t think they’re a trustworthy person, you should probably start to ignore recordings. Maybe not yet, but we’re getting there with the technology. We’re getting to a point where you should come to ignore the recording, unless you trust that person.

You can see how this matters, because we’re not quite there yet. Right now, you see videos on the internet, you don’t know who made them or how they got there. And at least by default, you take them at their word, as it were. You take the video for what it shows you. Hopefully you’re a bit sceptical, and you apply critical thinking, but you don’t assume the thing was literally made up on a computer. And in the future, you should assume that, or you should at least allow that as a sceptical possibility until and unless you know the chain of custody for that video.

Prior to the video becoming so prominent in society, photographs acted as an epistemic backstop for testimonial evidence. That was then displaced by the use of video recordings. Now, photographs have been able to be manipulated for decades with the use of photo manipulation software such as Photoshop, and that hasn’t necessarily had a detrimental effect on our trust in photographs. We seem to be able to discern a fake from a real photograph. Again, as you say, we base our judgment on the sources, and the likelihood of it being true. What makes deepfakes different to the threat people thought was posed by photo manipulation software?

It’s a good question to ask, and it is true that, especially before videotape became cheap, photographs were the main thing performing this kind of technological backstop role. And yes, it’s good to ask, what’s the difference here?

I think one important difference is that by the time cheap and easy faking of photographs, like Photoshop, became available in the 1990s, we already had videotapes stepping in to take over the epistemic backstop role. We haven’t lived in a world where even photographs could be cheaply and easily faked without something else there to serve the same role.

Further, videotape, because it’s chronologically extended, has more credibility than photographs ever did. We all know that if you take a single still photograph, and you frame it just right, you stand at just the right angle, you get the light in just the right way, you can make a scene misrepresent the way things were for just that one split second, even without any fancy photo editing. You can get a split second of somebody’s facial expression when they were yawning, and you can make it look like they were yelling instead. We all know that.

And part of what’s always been going on is that if one single still image were incredibly controversial, if you’re disputing whether it’s veridical, people would ask, “Well, is there video? Can we see the frame just before and the frame just after? Could we watch the 10 seconds on either side and see, is this still frame representative of what’s going on?” And the problem now is that we’re reaching a point where video similarly can’t be trusted, where that chronological extension is not a stronger guarantee of veridicality.

It raises one thought for me here: if we can’t trust video recordings to serve as the epistemic backstop for testimonial evidence, is there the fear that the next step will be governments saying, “Well, this is why we need CCTV everywhere. We need to record everything everywhere, all the time, and that will act as the ultimate proof”? It could serve as an excuse for governments, institutions, or organisations to put in place CCTV everywhere so we are recorded all the time. Is that a real fear?

Some governments are already doing that. I mentioned the UK and China both have enormous numbers of CCTV cameras per capita; they already have them in place. And those are two very different governments, very different societies, but they already lead the world in CCTV numbers. That was happening before deepfakes. Given that we’re already on trend to be constantly recording public life, you’re right that some societies may respond in this way.

Can I add an additional fear into that? Another layer for you. What if CCTV does get put in place by governments and they say, “Well, we have this recording. But this is the only one we can trust. Whatever you record is testimonial evidence, and whatever we record is proof.” Obviously, that opens up a whole range of different issues, where only the government’s video testimony can be trusted. Because obviously, that can be manipulated, too.

Yes, I agree. That’s a scary possibility. There’s something Orwellian in that. Because if there is a standing scepticism about all recording evidence, except for these special sources of monopolistic ‘trustworthy’ recordings, then of course the temptation for people who want to do bad things is to get control over the monopolistic source of trusted recordings. And so, yes, that would be very scary.

The problem is that we can’t avoid that, to some extent, with legal evidence. If we’re going to allow recordings to play any role in legal evidence, we’re going to have to be able to identify trusted sources. And the strategy has to be to figure out a way to make that not just a matter of the government, or just some one company. We definitely don’t want that. We need something more distributed.

Some people have talked about using, for example, blockchain as a way to track the trustworthiness of recordings. I haven’t checked on this lately, but I think that has faded a bit as we’ve started to realise that blockchain is, over long periods of time, computationally expensive, and there are questions about whether that’s a good use of resources. Imagine that every single video recording made anywhere in the world needs to be embedded in some blockchain or other. Maybe it’s doable. I’m not a computer scientist, but that sounds like a bit much to expect.

So, some strategy for figuring out ways of establishing trusted sources of recording evidence that are not controlled in a monopolistic fashion by just one source of power, that’s going to be crucial for figuring out what to do about this. At least for legal evidence, if not for public consumption in the future.

In the short term – before we arrive at this Orwellian nightmare of being recorded everywhere at all times, where the government controls everything – is truth, which is already on shaky ground in politics and business, likely to be a victim of deepfake technology?

Perhaps surprisingly, my view is that deepfakes don’t fundamentally change the way truth is in trouble, because it is already in a lot of trouble. I think deepfakes add an extra layer of complication, and they’re going to cause lots more political fighting, but there is a sense in which truth has been under attack for quite a while actually, for a decade at least, probably more, and other forms of digital technology were already eroding it.

The one I’m most scared of right now, which hasn’t even really happened yet, is natural language model artificial intelligence, like GPT-3, which uses machine learning to automatically create English texts. In a way, it’s like deepfakes for newspaper articles, or for encyclopaedia entries. It can generate very plausible sounding text that makes up a story, and at least sometimes a human reader won’t be able to tell that a computer wrote it.

What that would allow is a kind of spam fake news, just absolute mass production, drowning all our communication channels in enormous quantities of fake news. Most of it will be obviously fake to all of us, but we’ll be so overwhelmed by the sheer quantity of it that it’ll become harder, and harder, and harder to even find truthful news anywhere. And so, that’s something that really scares me. And against that background, I feel like deepfakes are probably not the worst thing we have to worry about. Although, they’re definitely part of this menagerie of scary things that are aimed at ending our ability to find truth.

From the ‘Truth’ edition of New Philosopher.

Regina Rini is the Canada Research Chair in Philosophy of Moral and Social Cognition at York University in Toronto. Rini writes about moral agency, moral disagreement, the psychology of moral judgment, partisanship in political epistemology, and the moral status of artificial intelligence. She is the winner of the 2018 Marc Sanders Award for Public Philosophy and has written for The Times Literary Supplement, The New York Times, The Los Angeles Times, and Practical Ethics, as well as the academic journals Philosophical Studies, Journal of the American Philosophical Association, Philosophers’ Imprint, Synthese, Neuroethics, and The Journal of Ethics.